Much has been said about the new concept of Cloud Computing. There is a myriad of definitions, and just as many companies claiming to have a Cloud Computing solution. What Cloud Computing really is, and which solutions will actually deliver what this new paradigm promises, are the questions most people want answered. For starters, let me state what is obvious to the more experienced among us, who have seen this before: the only new thing about Cloud Computing is its name. In the rest of this entry I’ll walk you through the evolution of a few concepts that led us to today’s so-called Cloud Computing paradigm. Without further ado, let’s dive right into it.

The Industrialization of IT

Information technology has always been about using computerized systems to get tasks done faster and more reliably. In the last couple of decades this journey has picked up pace with the introduction of object-oriented programming, component-based software, service-oriented architectures (SOA), business process management (BPM) technologies, the internet and its technologies (Web 2.0), and so on. The latest step in this long list of technologies, paradigms, and concepts is Cloud Computing. Building on technologies such as virtualization, SOA, Web 2.0, and grid computing, Cloud Computing promises a higher degree of IT industrialization. Making things happen faster, more reliably, and with less management effort is still the main goal of IT today.

Economy of Scale

As IT becomes more industrialized, one inevitable outcome is a new economy of scale that will allow IT providers to deliver services more cheaply, making IT more of a commodity and less of a burden. Businesses can increasingly treat IT as an operational expense (OPEX) rather than a capital expenditure (CAPEX), which makes a lot more sense for most of them.
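To make the OPEX versus CAPEX argument concrete, here is a minimal back-of-the-envelope sketch. Every price, lifetime, and demand figure in it is a hypothetical assumption chosen only to illustrate the arithmetic, and it ignores real-world costs such as power, staff, and licensing. Owning means buying enough servers for the peak month; renting means paying only for the server-months actually used.

```python
# All prices and demand figures below are hypothetical, for illustration only.
SERVER_PRICE = 3000.0        # assumed one-off purchase price per server
SERVER_LIFETIME_YEARS = 3    # assumed useful life, used to spread the purchase cost
SERVER_MONTHLY_RENT = 150.0  # assumed on-demand price per server per month


def capex_cost_per_year(monthly_demand):
    """Owning: enough servers must be bought for the peak month, used or not."""
    peak = max(monthly_demand)
    return peak * SERVER_PRICE / SERVER_LIFETIME_YEARS


def opex_cost_per_year(monthly_demand):
    """Renting: pay only for the server-months actually consumed."""
    return sum(monthly_demand) * SERVER_MONTHLY_RENT


# Hypothetical year: 2 servers for 9 months, 10 servers during a 3-month peak.
demand = [2] * 9 + [10] * 3

print(f"CAPEX (owned, per year): {capex_cost_per_year(demand):,.2f}")
print(f"OPEX (rented, per year): {opex_cost_per_year(demand):,.2f}")
```

With these made-up numbers, owning costs 10,000 per year and renting 7,200. With a flat demand of 10 servers every month, however, renting would cost 120 server-months × 150 = 18,000, so the comparison flips: the saving comes from paying for actual usage instead of peak capacity.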

Definition of Cloud Computing

What is Cloud Computing, after all? There are countless definitions of Cloud Computing, and huge disagreements about what it really is and means. So I’ll try to give you an idea of what it is, hopefully without adding to the confusion. In my opinion, one of the reasons for so much confusion is that two kinds of concepts get mixed up: technical ones and purely conceptual ones.

Conceptual Definition

According to NIST (the National Institute of Standards and Technology):

“Cloud computing is a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.”

This is a good conceptual definition of Cloud Computing that covers its essential characteristics: on-demand self-service, broad network access, resource pooling, rapid elasticity, and measured service.

Today, it is broadly accepted that there are three Cloud Computing service models, depending on the type of service provided: IaaS (Infrastructure as a Service), PaaS (Platform as a Service), and SaaS (Software as a Service).

IaaS – Infrastructure as a Service

Infrastructure as a Service provides infrastructure capabilities such as processing, storage, networking, security, and other resources that allow consumers to deploy their applications and data. This is the lowest level provided by the Cloud Computing paradigm. Some examples of IaaS are Amazon S3/EC2, Microsoft Windows Azure, and VMware vCloud.

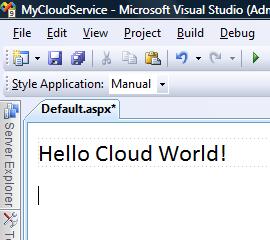

PaaS – Platform as a Service

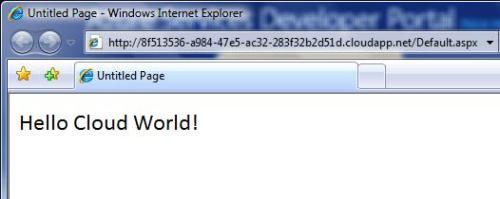

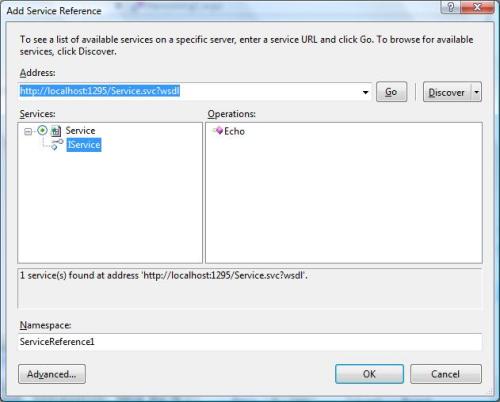

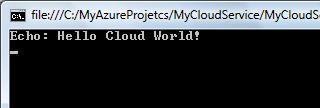

Platform as a Service provides application infrastructure, such as programming languages, database management systems, web servers, and application servers, that allows applications to run. The consumer does not manage the underlying infrastructure, including networking, operating systems, and storage. Some examples of PaaS are Google App Engine, Microsoft Azure Services Platform, and Oracle/AWS.

SaaS – Software as a Service

Software as a Service is the most sophisticated model, hiding all the underlying details of networking, storage, operating systems, database management systems, application servers, etc. from the consumer. It provides consumers with end-user applications, most commonly through a web browser (although a rich client can also be used). Some examples of SaaS are Salesforce CRM, Oracle CRM On Demand, Microsoft Online Services, and Google Apps.
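The three service models differ mainly in where the line between provider and consumer responsibility falls. The sketch below models that split as a cut point in a simplified layer stack. The layer names and cut points are an illustrative simplification, not a standard; placing the operating system with the consumer under IaaS follows the NIST definition, while the PaaS and SaaS cuts follow the descriptions above.

```python
# Simplified stack, bottom to top; layer names are an illustrative assumption.
LAYERS = [
    "networking",
    "storage",
    "operating system",
    "platform (DBMS, web and application servers)",
    "application",
]

# Position in LAYERS where consumer responsibility begins; every layer below
# it is managed by the provider.
CONSUMER_STARTS_AT = {
    "IaaS": LAYERS.index("operating system"),  # consumer controls the OS upward
    "PaaS": LAYERS.index("application"),       # consumer only deploys applications
    "SaaS": len(LAYERS),                       # consumer manages none of these layers
}


def responsibilities(model):
    """Map each layer to 'provider' or 'consumer' for the given service model."""
    cut = CONSUMER_STARTS_AT[model]
    return {layer: "provider" if i < cut else "consumer"
            for i, layer in enumerate(LAYERS)}


for model in ("IaaS", "PaaS", "SaaS"):
    managed = [layer for layer, who in responsibilities(model).items()
               if who == "consumer"]
    print(f"{model}: consumer manages {managed or 'nothing'}")
```

Representing each model as a single cut point captures the idea that moving from IaaS to PaaS to SaaS hands progressively more of the stack to the provider; real offerings often blur these boundaries.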

In reality, a number of other models are emerging that some analysts classify separately, outside the three models just described. Some examples are:

AIaaS – Application Infrastructure as a Service

As some analysts define it, this model provides application middleware, including application servers, an ESB (Enterprise Service Bus), and BPM (Business Process Management).

APaaS – Application Platform as a Service

Provides application servers with added multitenant elasticity, as a service. The PaaS (Platform as a Service) model described earlier encompasses both AIaaS and APaaS.

DaaS – Desktop as a Service

Based on application streaming and virtualization technology, provides desktop standardization, pay-per-use, management, and security.

BPaaS – Business Process as a Service

Provides business processes such as billing, contract management, payroll, HR, advertising, etc. as a service.

CaaS – Communications as a Service

Manages the hardware and software required for delivering voice over IP (VoIP), instant messaging, and video conferencing, for both fixed and mobile devices.

NaaS – Network as a Service

Allows telecommunications operators to provide network communications, billing, and intelligent features as services to consumers.

XaaS – Everything as a Service

Broad term that embraces all the models discussed here.

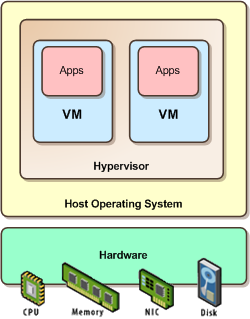

Technical Definition

Trouble usually starts when one tries to add technical concepts to the definition of a paradigm that is, conceptually, above technology. The confusion typically begins with the introduction of virtualization, Web 2.0, grid computing, and so on. This reminds me of countless discussions with colleagues about SOA and Web Services: the former is the concept, the latter a technology that best implements it. Undoubtedly, virtualization, grid computing, Web 2.0, SOA, WOA, etc., are the technology trends that will, for now, fuel the Cloud Computing initiative, but they are ephemeral, and the concept remains the same regardless of technology changes.

Cloud Types

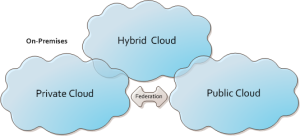

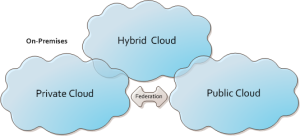

In terms of implementation, there are three major types of cloud deployment: private (or internal) clouds, public clouds, and hybrid clouds.

Private Clouds

Private clouds (also known as on-premises clouds) are cloud deployments inside the organization’s premises and managed internally. They do not enjoy the same economy of scale, but they offer advantages in terms of security. This is becoming a new form of architecture for the datacenter, sometimes called a Datacenter-in-a-box. VMware is pioneering this approach with products such as vCloud, vCenter, and vSphere, which help implement this type of cloud. VMware is also leading an effort to standardize the cloud through the DMTF (Distributed Management Task Force).

Public Clouds

Public clouds are the original concept of cloud computing, based on the ubiquity of the internet. This type of cloud provides all the benefits of the economy of scale, ease of management, and ever-growing elasticity. The major concern with this style of deployment is security, and that is the main reason the other deployment types have a say.

Hybrid Clouds

Hybrid clouds are a deployment type that sits between private and public clouds. They are usually a combination of the two, managed through the same administration and monitoring consoles (hence the importance of cloud standardization).

Conclusion

Much more than the technology that supports it, Cloud Computing is the latest plateau in the evolution of the IT industrialization process. Looking back at recent years in the IT industry, it was predictable that something like Cloud Computing would come along to revolutionize it. For a while, it seems, the “techie” people took over the IT business, always eager to try new technologies, often adding little value to the business they were trying to support. Now the business is back, claiming added value from IT departments, and Cloud Computing may very well be the answer.